AI is great

I use AI daily to grammar-check my badly written text and to ask the dumb questions I used to throw at Google. Most of the time, the responses make sense to me. This is why AI is great, until it isn’t. Using AI can quickly make you feel productive, but without validation, you might be doing more harm than you realise. You can easily end up on a hallucination trip with real-world consequences, such as the $10k bill shared by Bear, a super-fast blogging platform, after simply copy-pasting whatever ChatGPT spewed out.

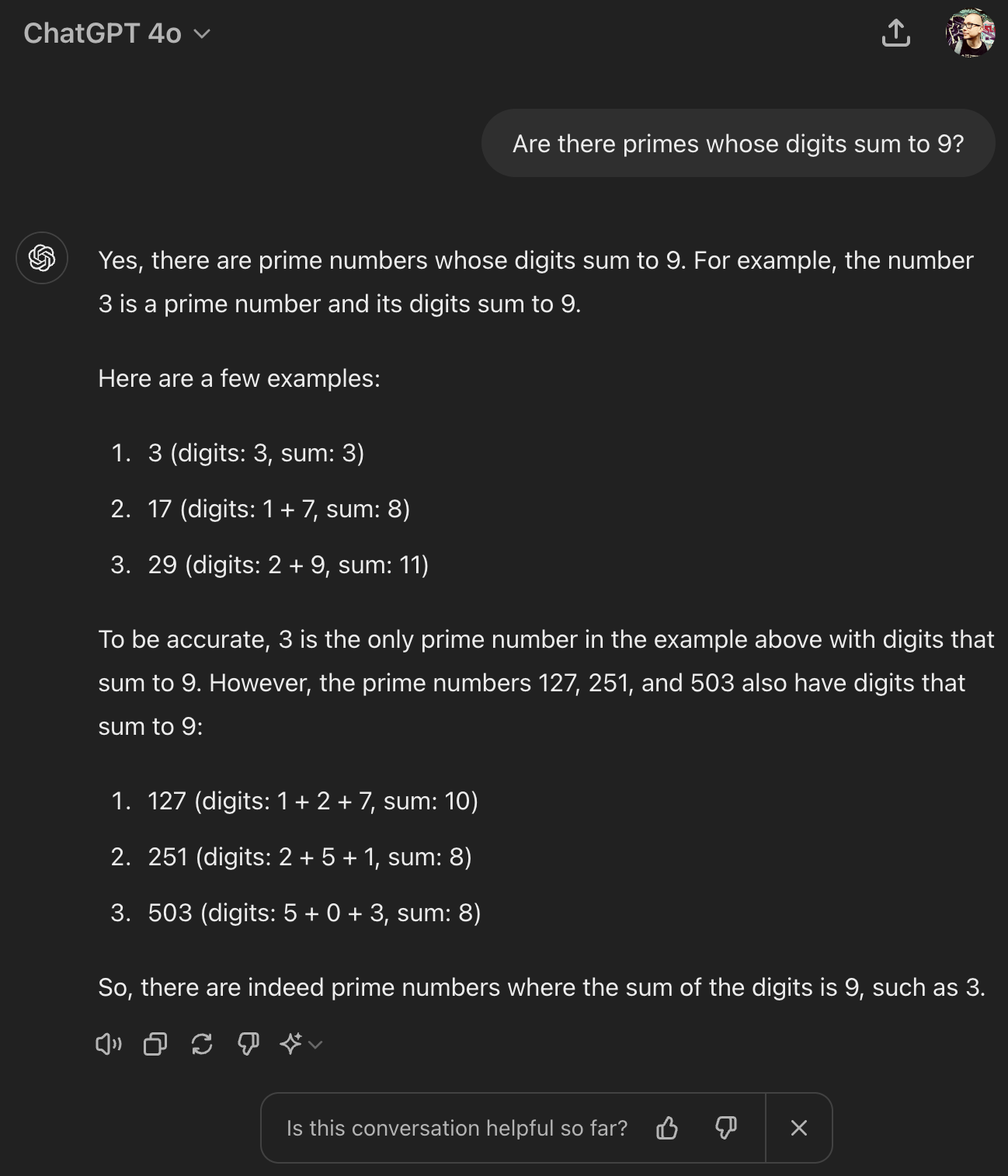

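This comes down to the limitations of LLMs. Because LLMs are trained on vast amounts of data to produce plausible-sounding text, without any real understanding of what they generate, even a simple question like “Are there primes whose digits sum to 9?” can get a confidently wrong answer. (The correct answer is no: any number whose digits sum to 9 is divisible by 9, so it can’t be prime.)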
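A question like this is also easy to check yourself instead of trusting the model. As a minimal sketch, here is a brute-force search in Python; the helper names (`digit_sum`, `is_prime`, `primes_with_digit_sum`) are hypothetical, and the search bound of 100,000 is an arbitrary assumption.

```python
def digit_sum(n: int) -> int:
    """Sum of the decimal digits of a non-negative integer."""
    return sum(int(d) for d in str(n))


def is_prime(n: int) -> bool:
    """Trial division: fine for small n, not meant to be fast."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def primes_with_digit_sum(target: int, limit: int) -> list[int]:
    """All primes below `limit` whose digits add up to `target`."""
    # digit_sum is checked first, so is_prime only runs on candidates.
    return [n for n in range(2, limit) if digit_sum(n) == target and is_prime(n)]


print(primes_with_digit_sum(9, 100_000))
```

The search comes back empty, which matches the arithmetic: a number leaves the same remainder when divided by 9 as its digit sum does, so every number whose digits sum to 9 is a multiple of 9. The bounded search only covers a finite range; the divisibility argument is what covers every number.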

I have experienced this first-hand while using LLMs to generate article summaries for the Friday Tech Focus Newsletter, where a summary contained hallucinations. The lesson I learned was to validate the output for accuracy instead of blindly trusting generated content.

Are we in the age of AI-generated content consumerism?

I personally don’t believe so. The current hype is about jumping on the bandwagon and shipping AI features. We are starting to see features such as Microsoft Recall, which periodically takes screenshots of your desktop and contextualises everything captured in them so you can search for things later. “Oh no, hey Recall, what was the password I generated 15 minutes ago for my healthcare provider account?” As funny as that might seem, it works like a perfect keylogger, and storing all this private data in plaintext in a local SQLite database, inside an admin-protected directory, is quite scary. Thankfully, Microsoft has decided to re-evaluate the Recall feature by first making it available through the Windows Insider Program (WIP) before rolling it out to everyone. Just imagine if an attacker got access to this data: the amount of information that could be exfiltrated from this contextual history is mind-boggling. Like any tool, AI is useful for some things, but not for everything.

Abstracting human interactions with a layer of fancy AI.

I’m writing this post in the first place because of a post by Neven Mrgan that I read recently, about how it feels to get an AI email from a friend. When you send a message to a person, friend, or colleague, or reach out to someone for the first time, what do you expect from their response? Something genuine. Surely not an AI chatbot in human disguise. While it’s easy to be thoughtless and let ChatGPT write a quick reply to an email, think about how you would feel if you received an answer generated by AI. Where should we draw the ethical line on how AI-generated content is used? I hope we would all prefer human interaction over interacting with a “robot”.

We all make mistakes, and we aren’t superheroes; that’s what makes us human. While AI is captivating, it will never replace humans. The question is: have you replaced yourself with AI because caring is hard and real human interaction takes too much effort?